WRITTEN BY

Amit Thakkar,

Vice President, Product Management

AI has demonstrated the potential to revolutionize industries by enhancing productivity, efficiency and task automation. While Generative AI focused on content creation, the era of Agentic AI focuses on autonomous actions and decision-making. Both forms of AI continually learn from their environment and improve the accuracy of their output. Machine learning requires processing vast amounts of data to create AI models that are pre-trained in AI factories. AI factories consist of massive data centers containing accelerated computing servers, storage and high-performance networking. Inferencing, the application of these pre-trained models, can be centralized or distributed at the enterprise edge.

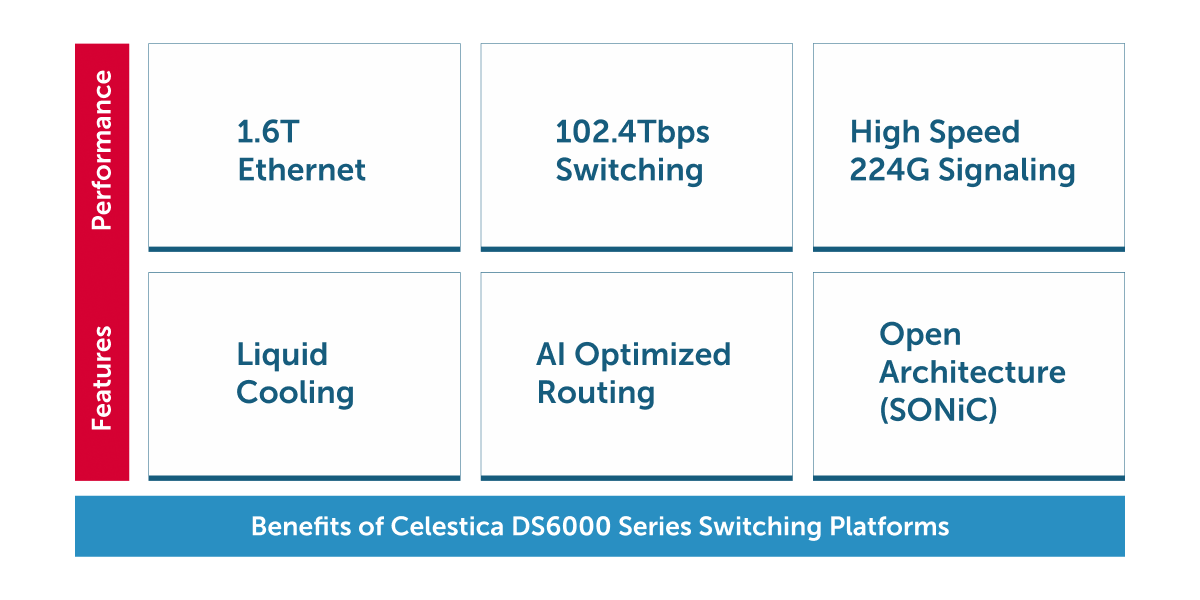

A high-performance network fabric is essential to interconnect accelerated computing nodes within these AI data centers. The arrival of 1.6 Terabit Ethernet (1.6TbE) significantly enhances the performance of the fabric for AI training and inferencing operations.

Next Generation Ethernet for AI

As AI progresses to its next phase, the creation of new foundational models requires even higher switching bandwidths and higher-speed Ethernet interfaces, such as 1.6TbE, to ensure that the communication demands of AI computation are fully met. Higher-bandwidth switch systems ensure that expensive GPU-based accelerated computing nodes are never starved for bandwidth and that the switch fabric provides the most efficient transport for AI. The introduction of 1.6TbE technology will enable the transition to faster speeds and higher bandwidth inside the data center. It will also enable optimized routing of large flows (commonly referred to as elephant flows), which are characteristic of AI training traffic. The next generation of 1.6TbE switching will be realized in systems with an unprecedented switching capacity of 102.4 Terabits per second.

High-Performance Solutions for AI Applications

Celestica’s DS6000 and DS6001 are next-generation, AI-optimized data center switching systems based on the Broadcom Tomahawk 6 switch chip. Tomahawk 6 silicon is built on the latest 3nm semiconductor process technology and provides 102.4Tbps of switching capacity in a single device, leveraging state-of-the-art 224Gbps SerDes technology. Celestica’s DS6000 series delivers 102.4Tbps of switching capacity with 1.6TbE, 128 x 800GbE, 256 x 400GbE, and 512 x 200GbE port configurations, providing lower-power, energy-efficient switch platforms with options for air-cooled and hybrid air- and liquid-cooled versions. Celestica’s advanced liquid cooling technology, applied in conjunction with linear pluggable optics (LPO), will improve power efficiency in AI compute racks.
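The port configurations listed above all follow from dividing the same 102.4Tbps of switching capacity by the port speed. The short Python sketch below works through that arithmetic. It is illustrative only: the function name `port_options` is hypothetical, and the 200Gbps nominal payload rate per 224Gbps SerDes lane is an assumption used for the lane counts, not a figure taken from a product datasheet.

```python
# Illustrative arithmetic only: port counts implied by a fixed switching capacity.

SWITCH_CAPACITY_GBPS = 102_400  # 102.4 Tb/s, as stated for Tomahawk 6
NOMINAL_LANE_GBPS = 200         # ASSUMPTION: payload rate of one 224Gbps SerDes lane

PORT_SPEEDS_GBPS = {
    "1.6TbE": 1600,
    "800GbE": 800,
    "400GbE": 400,
    "200GbE": 200,
}


def port_options(capacity_gbps, lane_gbps, speeds):
    """Return {port name: (port count, lanes per port)} at full capacity."""
    options = {}
    for name, speed in speeds.items():
        ports, remainder = divmod(capacity_gbps, speed)
        if remainder or speed % lane_gbps:
            raise ValueError(f"{name} does not divide evenly")
        options[name] = (ports, speed // lane_gbps)
    return options


if __name__ == "__main__":
    table = port_options(SWITCH_CAPACITY_GBPS, NOMINAL_LANE_GBPS, PORT_SPEEDS_GBPS)
    for name, (ports, lanes) in table.items():
        print(f"{ports:>4} x {name:<7} ({lanes} lane(s) per port)")
```

Under these assumptions, the 800GbE, 400GbE and 200GbE results match the 128, 256 and 512 port counts quoted above, and the same division gives the number of 1.6TbE ports that the full capacity can serve.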

New Features to Accelerate AI Data Center Deployment

The DS6000 and DS6001 can be positioned in AI backend networks for scale-up as well as scale-out networking. Both platforms enable high-scale networking fabrics for AI training and inferencing, both for frontier AI models developed by Cloud Service Providers and for domain-adapted models for the Enterprise. Cognitive Routing features such as advanced telemetry, dynamic congestion control and global load balancing provide the most efficient transport for AI traffic flows. Celestica provides software capabilities for AI transport in the data center through the SONiC network operating system, in partnership with the open-source community. In addition, Celestica Solutions for SONiC offers an AI-ready feature set in the Celestica SONiC plus network operating system, which is based on the open-source SONiC operating system.

DS6000 Data Center Switch


Solutions for the AI Era

1.6TbE marks the beginning of a new era in networking for AI. It simplifies AI fabric design, provides efficient transport for AI traffic, and meets the scaling needs of the modern AI data center. System innovations in liquid cooling and lower-power optics contribute significantly to a reduced carbon footprint and more sustainable data centers. Additionally, the future integration of co-packaged optics (CPO) is set to transform the landscape of data center and AI networking by delivering unprecedented levels of bandwidth, energy efficiency, and scalability. Ultimately, 1.6TbE-based switch systems, combined with open software innovations, are foundational elements of data center infrastructure for modern AI workloads. Visit our website to learn more about the Celestica DS6000 series of 1.6TbE platforms.