Why Hyperscalers Choose Celestica AI Fabric for Exa-scale Performance

We are in a new age of AI, with trillion-parameter models and real-time generative AI becoming the norm. For the world's hyperscalers, the race is on to build the massive, efficient, global infrastructure needed to power it all.

In this race, every microsecond of delay, every watt of power used, and every percentage point of GPU performance counts. These aren't just numbers—they directly impact how fast AI models can be trained, how much they cost to operate, and who gets the competitive edge.

While many vendors focus solely on upgrading bandwidth, Celestica offers something much bigger: a comprehensive, system-level AI fabric. We seamlessly integrate compute, accelerators, storage, and networking into one powerful, scalable platform.

We recently introduced our new family of 1.6TbE data center switches, the DS6000 and DS6001. They are not just another piece of hardware: they are the blueprint for building the next generation of hyperscale AI infrastructure.

Scaling AI Infrastructure: From Rack to Global Deployment

When we think about what hyperscalers are building today, it’s infrastructure that spans:

- Thousands of server racks

- Millions of GPUs

- Multiple continents and availability zones

- Petabytes of data for models with billions of parameters

To operate at this level, you need more than just raw speed. You need:

- An AI fabric that cuts latency to a minimum and keeps every accelerator working at its peak

- A system where compute, storage, and networking are perfectly in sync

- Something simple to set up, manage, and fix when things go wrong

- A platform that’s ready for the next wave of AI workloads and standards

Celestica’s DS6000 was built for exactly this—delivering 1.6T performance that’s deeply integrated into the entire AI stack.

Scale-Up: Densifying XPU Racks with Intra-Rack Efficiency

It all starts inside the server rack, where XPUs such as GPUs must communicate almost instantly, without bottlenecks. The DS6000 lets you pack more compute into each rack than ever before.

It connects up to 64 GPUs per rack, so they can all communicate at once without hitting a traffic jam. Lower per-hop latency, combined with non-blocking connectivity, minimizes the east-west congestion that slows things down. It is designed to support the newest multi-link GPU fabrics and native 1.6TbE network cards, making it well suited to collective operations such as all-reduce and gradient synchronization.

The result: Your accelerators run at peak efficiency. That means less idle time, faster training, and quicker time to a finished model.
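Why both per-hop latency and per-GPU bandwidth matter for all-reduce can be sketched with the standard alpha-beta cost model of a ring all-reduce: each of the N GPUs performs 2(N-1) steps, and each step pays a fixed latency and sends 1/N of the gradient buffer. This is a minimal sketch, not a model of the DS6000: the ring algorithm, the 1 GB gradient size, the 1 microsecond per-step latency, and the 800G and 1.6T link rates in the usage example are illustrative assumptions.

```python
def ring_allreduce_seconds(num_gpus: int, message_bytes: float,
                           link_gbps: float, step_latency_s: float) -> float:
    """Alpha-beta estimate of ring all-reduce time.

    Ring all-reduce = reduce-scatter (N-1 steps) + all-gather (N-1 steps).
    In every step each GPU sends one chunk of message_bytes / N.
    """
    if num_gpus < 2:
        return 0.0  # a single GPU has nothing to synchronize
    steps = 2 * (num_gpus - 1)
    chunk_bits = message_bytes * 8 / num_gpus
    per_step = step_latency_s + chunk_bits / (link_gbps * 1e9)
    return steps * per_step


if __name__ == "__main__":
    # Illustrative values only (assumptions, not DS6000 specifications):
    # 64 GPUs in one rack, 1 GB of gradients, 1 microsecond per step.
    for gbps in (800, 1600):
        t = ring_allreduce_seconds(64, 1e9, gbps, 1e-6)
        print(f"{gbps}G links: {t * 1e3:.2f} ms per all-reduce")
```

Under these assumptions the script prints about 19.81 ms at 800G and 9.97 ms at 1.6T. Doubling bandwidth roughly halves the bandwidth term, while the latency term, 2(N-1) times the per-step latency, grows with GPU count; that is the term lower per-hop latency attacks.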

Scale-Out: Flattening Multi-Rack AI Clusters

As you add more racks and pods, legacy networks (with 3 or 4 tiers) become slow, complex, and overloaded. The DS6000 solves this by creating a massive, flat, 1.6T scale-out fabric:

- It enables simple 2-tier networks that can replace clunky legacy designs.

- It slashes end-to-end latency by cutting the number of switch hops.

- It doubles the bisection bandwidth, which is critical for workloads where every GPU must synchronize at once.

- It reduces the number of switches you need by about 80% compared to an 800G setup.

When you're running over 10,000 GPUs, communication delays can eat up half your training time. The DS6000 cuts down that waste.
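The latency and bisection claims above follow from two properties of a non-blocking folded-Clos (leaf-spine) fabric: the worst-case path crosses 2t-1 switches for t tiers, and bisection bandwidth is half the aggregate GPU bandwidth. A minimal sketch under those assumptions; the 500 ns per-hop latency is a hypothetical placeholder rather than a DS6000 figure, and the 2,048 and 4,096 GPU counts are example sizes for a 64-port, 2-tier fabric at 800G and 1.6T ports.

```python
def worst_case_switch_hops(tiers: int) -> int:
    """Switches crossed on the longest path in a folded-Clos fabric.

    Traffic climbs through every tier to the top and back down,
    so the top tier is crossed once and each lower tier twice.
    """
    return 2 * tiers - 1


def switching_latency_ns(tiers: int, per_hop_ns: float) -> float:
    """Worst-case switching latency (ignores cables, NICs, and queuing)."""
    return worst_case_switch_hops(tiers) * per_hop_ns


def bisection_tbps(num_gpus: int, gpu_gbps: float) -> float:
    """Bisection bandwidth of a non-blocking fabric, in Tb/s.

    Split the GPUs into two equal halves: every GPU in one half can send
    to the other half at full rate, giving (N / 2) * per-GPU bandwidth.
    """
    return num_gpus / 2 * gpu_gbps / 1000


if __name__ == "__main__":
    HOP_NS = 500  # hypothetical per-switch latency, not a DS6000 figure
    for tiers in (3, 2):
        print(f"{tiers}-tier: {worst_case_switch_hops(tiers)} hops, "
              f"{switching_latency_ns(tiers, HOP_NS):.0f} ns switching latency")
    # Example fabric sizes at 800G per GPU (assumed, see lead-in)
    for gpus in (2048, 4096):
        print(f"{gpus} GPUs: {bisection_tbps(gpus, 800):.1f} Tb/s bisection")
```

Dropping from three tiers to two removes two switches from the worst-case path (5 hops to 3), and at a fixed 800G per GPU, doubling the GPUs in a non-blocking fabric doubles its bisection bandwidth (819.2 to 1638.4 Tb/s).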

Building Global AI Infrastructure

Truly global AI needs a network fabric that stretches across regions and availability zones without missing a beat. The DS6000 is built for this scale.

It simplifies routing, so network state propagates faster and the fabric can detect and recover from faults more quickly. Every part of the system, from the ASIC to the optics, is optimized to work together seamlessly. This creates a WAN-aware AI fabric that maintains peak performance regardless of distance.

Even a 2–3% improvement in GPU utilization can translate into millions of dollars in annual savings.
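The arithmetic behind this claim is simple: value recovered = GPUs × cost per GPU-hour × hours per year × utilization gain. The sketch below assumes a fleet of 10,000 GPUs and a cost of $2.00 per GPU-hour purely for illustration; neither figure comes from Celestica, and `annual_savings_usd` is a hypothetical helper.

```python
def annual_savings_usd(num_gpus: int, cost_per_gpu_hour: float,
                       utilization_gain: float) -> float:
    """Value of recovered GPU time over one year.

    A utilization gain of 0.02 means 2% of every GPU-hour that was
    previously lost to waiting now does useful work.
    """
    hours_per_year = 24 * 365
    return num_gpus * cost_per_gpu_hour * hours_per_year * utilization_gain


if __name__ == "__main__":
    # Hypothetical fleet: 10,000 GPUs at an assumed $2.00 per GPU-hour.
    for gain in (0.02, 0.03):
        savings = annual_savings_usd(10_000, 2.00, gain)
        print(f"{gain:.0%} gain: ${savings:,.0f} per year")
```

Under these assumptions a 2% gain is worth about $3.5 million a year and a 3% gain about $5.3 million; larger fleets or higher GPU-hour costs scale the result linearly.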

The DS6000 and DS6001 are also designed to support Data Center Interconnect (DCI) optics, such as future 1.6T-ZR/ZR+ coherent modules.

Scaling the Future of AI: Strategic Deployment at Exa-Scale

Across the globe, leading hyperscalers, service providers, and OEMs are choosing Celestica to design their next-generation AI infrastructure. These are deployments spanning thousands of racks, a tremendous number of GPUs, and multiple cloud regions. At the core of it all is Celestica’s DS6000 platform, powered by our 102.4T switching architecture designed for exa-scale performance.

This is about more than just bandwidth. It's about building an intelligent fabric that can:

- Span multiple data centers across the globe with predictable performance

- Help models converge faster with ultra-low switching latency and non-blocking designs

- Optimize power and space, delivering major cost savings in dense AI clusters

- Provide a smooth transition to NICs based on 224G SerDes and to the trillion-parameter models of tomorrow

- Drive down the total cost of ownership (TCO) by simplifying the network, streamlining operations, and getting more out of every GPU

- Maximize GPU utilization across AI, high-performance computing (HPC), and cloud-native workloads

Celestica’s system-level approach turns a data center switch into a strategic asset, empowering our partners to build scalable, resilient, and future-proof AI fabrics for their multi-cloud environments.

Future-Proofing AI Infrastructure: Built for Today, Ready for Tomorrow

The DS6000 is designed to evolve with the AI landscape:

- It's ready for the next wave of networking hardware based on 224G SerDes technology

- It offers flexible port breakouts: 4x400G, 2x800G, or 1x1.6T

- It ensures a seamless migration to next-gen servers—no forklift upgrades required

- It is suited to AI fabrics for trillion-parameter models, multi-modal AI, and real-time inference
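Each breakout option in the list above carves the same physical port into logical ports with the same total bandwidth. A minimal sketch, assuming 64 physical ports, each built from eight 200G electrical lanes (the lane rate carried by 224G-class SerDes); the `Breakout` type and the port and lane counts are illustrative assumptions, not values from a DS6000 datasheet.

```python
from dataclasses import dataclass

LANES_PER_PORT = 8   # assumption: 8 electrical lanes per 1.6T port
LANE_GBPS = 200      # assumption: 200G per lane (224G-class SerDes)
PHYSICAL_PORTS = 64  # assumption: 64 x 1.6T = 102.4T


@dataclass(frozen=True)
class Breakout:
    logical_ports: int  # logical ports carved from one physical port
    port_gbps: int      # speed of each logical port

    @property
    def lanes_each(self) -> int:
        """Lanes assigned to each logical port; validates the mode."""
        lanes, remainder = divmod(LANES_PER_PORT, self.logical_ports)
        if remainder:
            raise ValueError("lanes must split evenly across logical ports")
        if lanes * LANE_GBPS != self.port_gbps:
            raise ValueError("port speed does not match its lane allocation")
        return lanes


MODES = {
    "4x400G": Breakout(4, 400),
    "2x800G": Breakout(2, 800),
    "1x1.6T": Breakout(1, 1600),
}

if __name__ == "__main__":
    for name, mode in MODES.items():
        total_ports = PHYSICAL_PORTS * mode.logical_ports
        total_tbps = total_ports * mode.port_gbps / 1000
        print(f"{name}: {mode.lanes_each} lanes each, "
              f"{total_ports} ports, {total_tbps:.1f} Tb/s")
```

Every mode totals 102.4 Tb/s under these assumptions; the choice only changes how many logical ports, and at what speed, the same lanes present.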

Architecture Deep Dive: Understanding 1.6T Performance

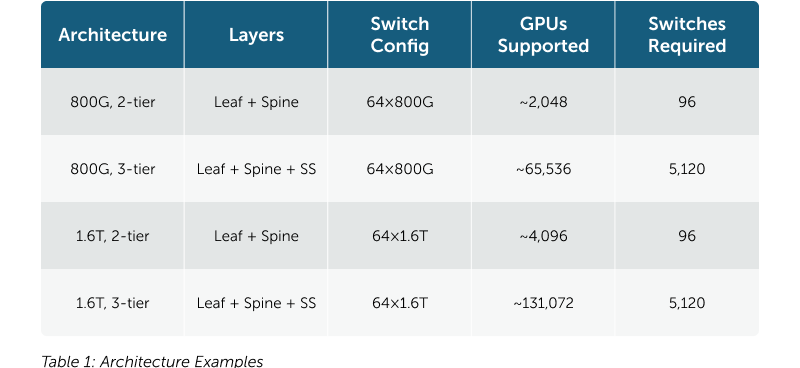

Key Insight: The takeaway is simple. With 1.6T, you can support twice the number of GPUs using the same simple 2-tier AI fabric, all at full, non-blocking bandwidth.

Assumptions:

- Each 64-port switch delivers non-blocking bandwidth per GPU (800G per GPU), with port counts scaled to support full GPU connectivity at the specified tier.

- Under the assumptions in Table 1, 1.6T-based 102.4Tbps switches support twice (2x) as many GPUs as 800G-based 51.2Tbps switches.
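One construction consistent with these assumptions is a non-blocking leaf-spine built from identical 64-port switches, where each leaf uses half its ports for GPUs and half for spine uplinks, and each 1.6T downlink is broken out into two 800G GPU connections. The exact construction behind Table 1 is not shown here, so treat this as a plausible reading rather than Celestica's method; `two_tier_capacity` is a hypothetical helper.

```python
def two_tier_capacity(ports: int, port_gbps: int, gpu_gbps: int) -> dict:
    """Largest non-blocking leaf-spine fabric built from identical switches.

    Each leaf splits its ports half down (to GPUs) and half up (to spines),
    so downlink and uplink bandwidth are equal (non-blocking). Each downlink
    port is broken out into port_gbps // gpu_gbps GPU connections.
    """
    if port_gbps % gpu_gbps:
        raise ValueError("port speed must be a multiple of GPU bandwidth")
    down = up = ports // 2
    gpus_per_leaf = down * (port_gbps // gpu_gbps)
    spines = up    # each leaf sends one uplink to every spine
    leaves = ports  # each spine port reaches a different leaf
    return {
        "gpus": leaves * gpus_per_leaf,
        "leaves": leaves,
        "spines": spines,
        "switch_tbps": ports * port_gbps / 1000,
    }


if __name__ == "__main__":
    gen_800 = two_tier_capacity(64, 800, 800)    # 64 x 800G = 51.2T switch
    gen_1600 = two_tier_capacity(64, 1600, 800)  # 64 x 1.6T = 102.4T switch
    for label, fab in (("800G", gen_800), ("1.6T", gen_1600)):
        print(f"{label}: {fab['gpus']} GPUs, "
              f"{fab['leaves']} leaves + {fab['spines']} spines")
    print("ratio:", gen_1600["gpus"] / gen_800["gpus"])
```

Both fabrics use 96 switches (64 leaves and 32 spines), but the 1.6T version serves 4,096 GPUs at 800G instead of 2,048, matching the 2x figure. The doubling comes from each 1.6T downlink serving two 800G GPUs while 1.6T uplinks keep every leaf non-blocking.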

Why Cloud-Service Providers, Hyperscalers & OEMs Partner with Celestica for AI

Celestica operates as a foundational engineering partner for the world’s leading cloud, AI, and storage providers, enabling them to design and deploy fully integrated, high-performance systems at global scale.

- Celestica’s scalable and flexible design allows for custom branding and firmware

- We provide rack-scale integration for next-generation AI servers, including accelerators, networking, and AI storage

- Celestica’s proven global manufacturing and logistics mean you can deploy hardware rapidly, anywhere

- We build deep co-engineering partnerships with our hyperscaler customers

Cloud service providers (CSPs) and OEMs work with us to deliver unique AI infrastructure solutions that go far beyond a simple piece of hardware. Celestica Solutions for SONiC is a key differentiator for our customers.

Full SONiC Value Proposition for Open Networking

Celestica’s commitment to open networking shows in our full support for SONiC (Software for Open Networking in the Cloud). SONiC gives Celestica’s partners the flexibility to deploy an open network stack and avoid vendor lock-in. With SONiC, hyperscalers and CSPs can develop their own features, streamline automation, and integrate seamlessly with their existing operational tools. This open approach lets them control their own roadmap and build a truly scalable, high-performance AI fabric.

- Open Networking, Elevated: Celestica’s DS6000 delivers full SONiC support, empowering hyperscalers to deploy open networks with enterprise-grade reliability and control.

- Accelerated Time-to-Insight: With advanced SONiC telemetry, cloud providers can gain actionable insights into GPU utilization, congestion hotspots, and system health, helping them speed up model convergence and make smarter scaling decisions.

- AI Networking Infrastructure with Advanced Protocol Suite: The Celestica Solutions for SONiC implementation supports VXLAN EVPN, MP-BGP, ECMP, OSPFv2, Priority Flow Control (PFC), and RoCEv2, among others, enabling scalable, programmable data center fabrics.
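ECMP, one of the protocols listed above, spreads traffic across equal-cost paths (for example, the spines of a 2-tier fabric) by hashing packet header fields. A minimal sketch of per-flow hashing, assuming a 5-tuple key and CRC32 as a stand-in for the switch ASIC's hash; real platforms select hash fields and algorithms in hardware, and `ecmp_next_hop` is a hypothetical helper, not a SONiC API. UDP port 4791 is the standard RoCEv2 destination port, and RoCEv2 traffic typically varies the UDP source port to give ECMP entropy.

```python
import ipaddress
import zlib
from typing import NamedTuple


class FiveTuple(NamedTuple):
    src_ip: str
    dst_ip: str
    protocol: int  # 17 = UDP
    src_port: int
    dst_port: int


ROCEV2_UDP_PORT = 4791  # IANA-assigned UDP destination port for RoCEv2


def ecmp_next_hop(flow: FiveTuple, next_hops: list[str]) -> str:
    """Pick one equal-cost next hop by hashing the flow's 5-tuple.

    CRC32 stands in for the hardware hash, which differs by platform.
    All packets of a flow hash identically, so they stay in order on one
    path, while different flows spread across the available paths.
    """
    key = b"".join((
        ipaddress.ip_address(flow.src_ip).packed,
        ipaddress.ip_address(flow.dst_ip).packed,
        flow.protocol.to_bytes(1, "big"),
        flow.src_port.to_bytes(2, "big"),
        flow.dst_port.to_bytes(2, "big"),
    ))
    return next_hops[zlib.crc32(key) % len(next_hops)]


if __name__ == "__main__":
    spines = [f"spine{i}" for i in range(4)]
    # Hypothetical RoCEv2 flows between two hosts, differing only in source port
    for sport in range(49152, 49160):
        flow = FiveTuple("10.0.0.1", "10.0.1.1", 17, sport, ROCEV2_UDP_PORT)
        print(sport, "->", ecmp_next_hop(flow, spines))
```

Because hashing is per flow, a handful of very large flows can still land on the same path; this is a known limitation of static ECMP.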

Celestica Powers the Future of AI Infrastructures – Key Takeaways

Celestica’s switching platforms represent a fundamental shift in how hyperscalers and OEMs build their AI infrastructure. With Celestica, you can:

- Collapse network tiers and simplify your architecture

- Scale your clusters with fewer devices

- Maximize the ROI on every single GPU

- Future-proof your investment for the next wave of AI

Whether you're packing more power into a single rack or managing clusters across the world, Celestica provides the performance, efficiency, and scalability to power the future of intelligence. Learn more about how the Celestica DS6000 and DS6001 can transform your network.

Read our whitepaper, From 800G to 1.6T: Re-Architecting Hyperscale Networks for Next-Generation AI Clusters, to learn more about the impacts and benefits of 1.6T Ethernet Networking.